MapLibre on Android Auto

Nov 16, 2025 · 10 minute read · code, android

Companies not using Google Maps often use MapLibre for maps and, on mobile specifically, maplibre-native. The libraries work well on mobile today, and there is now a nice Kotlin Multiplatform (KMP) Compose wrapper for them as well.

How do we render a MapLibre map on Android Auto? It turns out there are a few ways to do so. I will cover the three approaches we tested in our app, along with the performance implications of each.

Integration with Android Auto

Android Auto gives navigation apps access to a Surface they can draw the map on. Access is granted through AppManager’s setSurfaceCallback method, which takes a SurfaceCallback with methods such as onSurfaceAvailable() and onSurfaceDestroyed().

Texture Renderer

When searching for how to run MapLibre on Android Auto, we find a MapLibre-Android-Auto-Sample project within the MapLibre org on GitHub. From the README.md:

> To get the MapView to render on the surface, we do the following; We render the MapView offscreen, create a ‘screenshot’ of this view. And we’ll draw this Bitmap to the Surface via a Canvas. Repeat the process 30 times per second, and you’ve got a working MapView on Android Auto.

We can see this in the onCreate lifecycle callback of CarMapContainer. Here’s a shortened version of it:

```kotlin
override fun onCreate(owner: LifecycleOwner) {
    MapLibre.getInstance(carContext)

    runOnMainThread {
        mapViewInstance = createMapViewInstance().apply {
            carContext.windowManager.addView(
                this,
                getWindowManagerLayoutParams()
            )
            onStart()
            getMapAsync { /* setup logic */ }
        }
    }
}

private fun createMapViewInstance() =
    MapView(carContext, MapLibreMapOptions.createFromAttributes(carContext).apply {
        // Set the textureMode to true, so a TextureView is created
        // We can extract this TextureView to draw on the Android Auto surface
        textureMode(true)
    }).apply {
        setLayerType(View.LAYER_TYPE_HARDWARE, Paint())
    }
```

Note that the implementation intentionally enables textureMode, even though the method’s Javadoc warns:

> Since the 5.2.0 release we replaced our TextureView with an android.opengl.GLSurfaceView implementation. Enabling this option will use the android.view.TextureView instead. android.view.TextureView can be useful in situations where you need to animate, scale or transform the view. This comes at a significant performance penalty and should not be considered unless absolutely needed.

This mode is, however, necessary for this approach, since the sample reads each frame back out of that TextureView. Finally, the implementation connects the Android Auto surface callback and, once the surface is available, sets up rendering:

```kotlin
override fun onSurfaceAvailable(surfaceContainer: SurfaceContainer) {
    Log.v(LOG_TAG, "CarMapRenderer.onSurfaceAvailable")
    this.surfaceContainer = surfaceContainer
    mapContainer.setSurfaceSize(surfaceContainer.width, surfaceContainer.height)

    mapContainer.mapViewInstance?.apply {
        addOnDidBecomeIdleListener { drawOnSurface() }
        addOnWillStartRenderingFrameListener {
            drawOnSurface()
        }
    }

    runOnMainThread {
        // Start drawing the map on the android auto surface
        drawOnSurface()
    }
}

private fun drawOnSurface() {
    val mapView = mapContainer.mapViewInstance ?: return
    val surface = surfaceContainer?.surface ?: return
    val canvas = surface.lockHardwareCanvas()
    drawMapOnCanvas(mapView, canvas)
    surface.unlockCanvasAndPost(canvas)
}
```

Note the addOnWillStartRenderingFrameListener callback: whenever the map is about to render a frame, drawOnSurface is called, which in turn copies the map onto the Surface:

```kotlin
private fun drawMapOnCanvas(mapView: MapView, canvas: Canvas) {
    val mapViewTextureView = mapView.takeIf { it.childCount > 0 }?.getChildAt(0) as? TextureView
    mapViewTextureView?.bitmap?.let {
        canvas.drawBitmap(it, 0f, 0f, null)
    }
    // snip code to draw attribution text
}
```

Performance

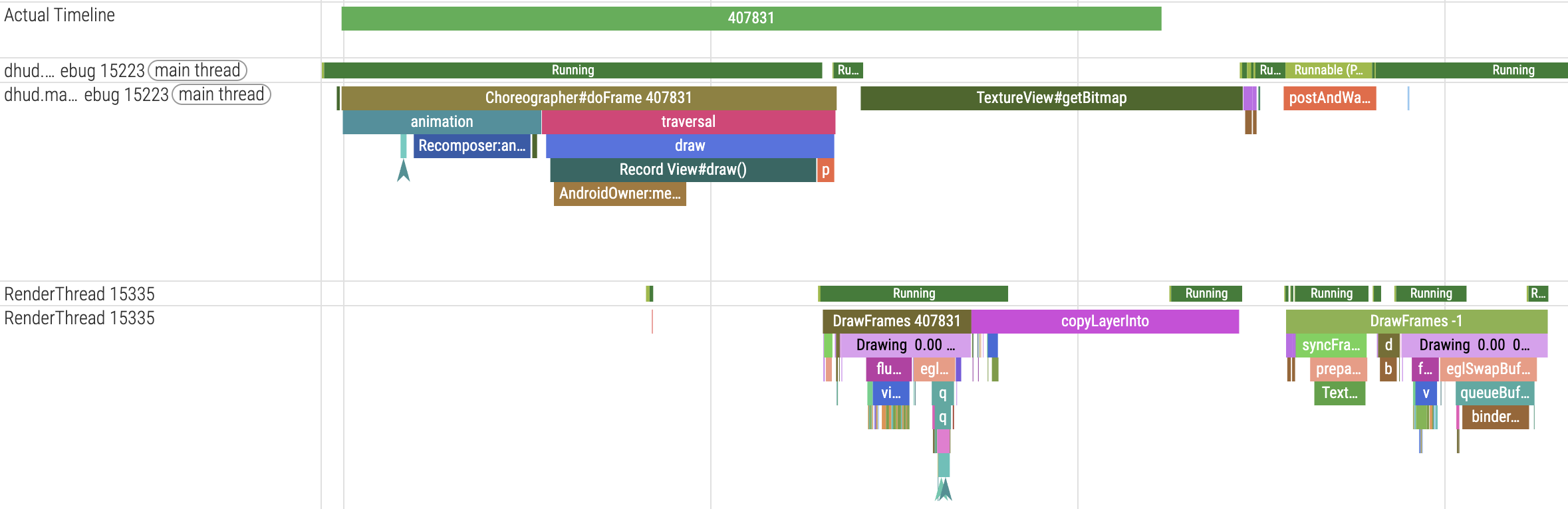

From looking at the code (and even from reading the comments in the README and the code itself), this approach doesn’t look efficient. Indeed, recording a Perfetto trace¹ shows this pretty clearly:

| Jank type | Min duration (ms) | Max duration (ms) | Mean duration (ms) | Occurrences |
|---|---|---|---|---|
| Buffer Stuffing | 10.96 | 24.59 | 15.81 | 69 |
| App Deadline Missed | 13.51 | 77.24 | 32.13 | 25 |
| App Deadline Missed, Buffer Stuffing | 13.58 | 50.21 | 27.23 | 21 |
| None | 10.92 | 19.44 | 13.73 | 9 |

Perfetto’s documentation defines these jank types as follows:

None

All good. No jank with the frame. The ideal state that should be aimed for.

Buffer Stuffing

This is more of a state than a jank. This happens if the app keeps sending new frames to SurfaceFlinger before the previous frame was even presented. The internal Buffer Queue is stuffed with buffers that are yet to be presented, hence the name, Buffer Stuffing. These extra buffers in the queue are presented only one after the other thus resulting in extra latency. This can also result in a stage where there are no more buffers for the app to use and it goes into a dequeue blocking wait. The actual duration of work performed by the app might still be within the deadline, but due to the stuffed nature, all the frames will be presented at least one vsync late no matter how quickly the app finishes its work. Frames will still be smooth in this state but there is an increased input latency associated with the late present.

App Deadline Missed

The app ran longer than expected causing a jank. The total time taken by the app frame is calculated by using the choreographer wake-up as the start time and max(gpu, post time) as the end time. Post time is the time the frame was sent to SurfaceFlinger. Since the GPU usually runs in parallel, it could be that the gpu finished later than the post time.
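The two definitions that matter most here can be made concrete with a small Kotlin sketch. The first half is a toy model of Buffer Stuffing: it assumes, only for illustration, that one queued buffer is presented per vsync in first-in, first-out order, and it ignores the buffer-count limit and the dequeue blocking mentioned above. The second half applies the App Deadline Missed formula, max(GPU end, post time) minus the Choreographer wake-up, but checks it against a single fixed budget, which simplifies how FrameTimeline actually judges each frame against its expected timeline. All names are hypothetical.

```kotlin
// --- Buffer Stuffing: toy model, not SurfaceFlinger's real algorithm. ---
// Assumes at most one buffer is presented per vsync, first in, first out.
// queuedAt[i] is the vsync period in which the app queued frame i; the result
// is the vsync at which each frame would be presented.
fun presentVsyncs(queuedAt: List<Int>): List<Int> {
    val presented = mutableListOf<Int>()
    var lastPresent: Int? = null
    for (q in queuedAt) {
        val earliest = q + 1 // ideally shown on the next vsync
        // ...but never before the buffer queued ahead of it has been shown.
        val present = lastPresent?.let { maxOf(earliest, it + 1) } ?: earliest
        presented += present
        lastPresent = present
    }
    return presented
}

// Extra vsyncs of latency per frame compared with the ideal "next vsync" present.
fun extraLatencyVsyncs(queuedAt: List<Int>): List<Int> =
    presentVsyncs(queuedAt).zip(queuedAt) { present, q -> present - (q + 1) }

// --- App Deadline Missed: the duration formula from the definition. ---
// All timestamps are nanoseconds on the same clock.
data class AppFrame(
    val choreographerWakeUpNs: Long, // start of the app's work on this frame
    val postTimeNs: Long,            // when the frame was sent to SurfaceFlinger
    val gpuEndNs: Long,              // when the GPU finished the frame
)

// The GPU runs in parallel, so it may finish after the post time.
fun appFrameDurationNs(frame: AppFrame): Long =
    maxOf(frame.gpuEndNs, frame.postTimeNs) - frame.choreographerWakeUpNs

// Simplification: a single fixed budget instead of each frame's expected timeline.
fun missedDeadline(frame: AppFrame, budgetNs: Long): Boolean =
    appFrameDurationNs(frame) > budgetNs
```

In the toy model, once a single extra frame is queued (two frames in period 0), every later frame is shown one vsync late even though the app keeps pace at one frame per vsync: smooth, but laggier. In the second half, a frame whose GPU work ends at 20 ms counts as a missed 60 Hz deadline even though it was posted at 8 ms.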

If we zoom in, we can see some of the issues causing this:

Every frame ends up being drawn twice: first to the offscreen map, and then, via TextureView#getBitmap and the corresponding copyLayerInto call, to the Android Auto surface. If we estimate our “acceptable frames” as (None + Buffer Stuffing) / Total, we get ~62.9% acceptable frames across a 15-second window. We render 124 frames within this window, which is ~8.27 frames per second.
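The back-of-the-envelope numbers can be reproduced with a few lines of Kotlin. This is a hypothetical helper (`JankCounts` is not part of Perfetto or MapLibre); it simply applies the (None + Buffer Stuffing) / Total formula and divides the frame count by the length of the capture window.

```kotlin
// Frame counts per Perfetto jank type for one capture window.
data class JankCounts(
    val none: Int,
    val bufferStuffing: Int,
    val deadlineMissed: Int,
    val deadlineMissedAndBufferStuffing: Int,
) {
    val total: Int
        get() = none + bufferStuffing + deadlineMissed + deadlineMissedAndBufferStuffing

    // The post's back-of-the-envelope metric: (None + Buffer Stuffing) / Total.
    // Buffer Stuffing frames are still smooth; they only add latency.
    val acceptableRatio: Double
        get() = (none + bufferStuffing).toDouble() / total

    // Average rendered frames per second over the window.
    fun framesPerSecond(windowSeconds: Double): Double = total / windowSeconds
}
```

Plugging in the texture renderer’s counts (9, 69, 25, 21) gives 124 frames, 78 / 124 ≈ 62.9% acceptable, and 124 / 15 ≈ 8.27 frames per second; the same helper applies unchanged to the tables for the other two renderers.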

Direct Renderer

Around the time we integrated the above method, we realized we needed something more performant. Fortunately, at about the same time, an engineer from Grab opened a pull request introducing a faster Android Auto solution that avoids the costs of the initial implementation.

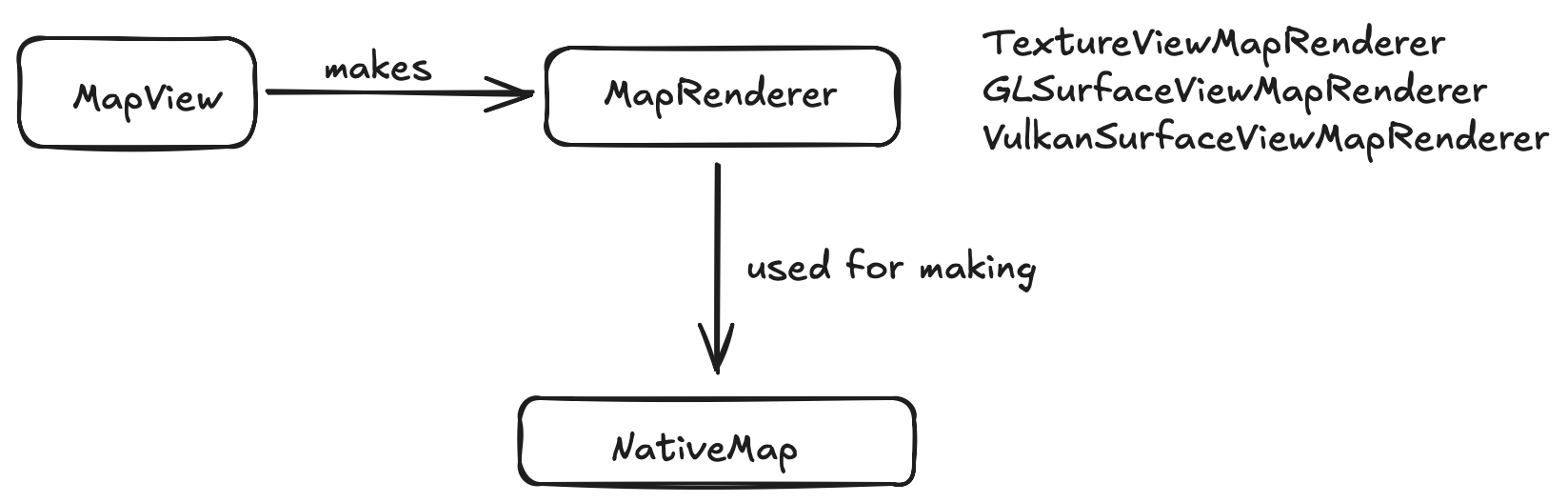

To understand how this works, we have to back up and look at how MapView renders maps under the hood. MapLibre shares code between platforms using C++. The map drawing operations are exposed to Android via NativeMap and NativeMapView (despite its name, NativeMapView is not an Android View), which consist mostly of JNI calls.

MapLibreMap wraps the NativeMapView, which forwards its various commands directly to the C++ layer.

So how does the map get rendered? NativeMapView requires a MapRenderer parameter that does the actual rendering. This renderer class has methods for when a surface is created, changed, or destroyed. There are three primary renderers on Android today: TextureViewMapRenderer (used by the original solution), GLSurfaceViewMapRenderer (used by default today), and VulkanSurfaceViewMapRenderer. The last two inherit from a shared SurfaceViewMapRenderer.

Notice that, with the exception of MapView itself (and perhaps MapRenderer), none of this actually needs android.view.* classes. In other words, we can build a MapRenderer that has no associated Android View, and replicate MapView as a plain Java/Kotlin class. That, in short, is what the Grab patch does.

The Grab engineers wrote a SurfaceMapRenderer and a SurfaceRenderThread, heavily based on MapLibreGLSurfaceView and its corresponding GLSurfaceViewMapRenderer, except that they are not Android Views.
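To illustrate the idea that a renderer only needs surface events, not a View, here is a deliberately simplified, JVM-only Kotlin model. Everything in it is hypothetical: `FakeSurface` stands in for `android.view.Surface`, `HeadlessRenderer` is loosely modeled on the created/changed/destroyed callbacks described above rather than on MapLibre’s actual `MapRenderer` signatures, and no OpenGL or EGL work happens. It is not the Grab patch.

```kotlin
// Hypothetical stand-in for android.view.Surface, so the sketch runs on a plain JVM.
class FakeSurface(val name: String)

// Hypothetical renderer contract: surface lifecycle events only, no View anywhere.
interface HeadlessRenderer {
    fun onSurfaceCreated(surface: FakeSurface)
    fun onSurfaceChanged(width: Int, height: Int)
    fun onSurfaceDestroyed()
}

// Records what it would draw; a real renderer would own an EGL context and
// a render thread (the role SurfaceRenderThread plays in the Grab patch).
class LoggingRenderer : HeadlessRenderer {
    val events = mutableListOf<String>()
    private var surface: FakeSurface? = null

    override fun onSurfaceCreated(surface: FakeSurface) {
        this.surface = surface
        events += "created:${surface.name}"
    }

    override fun onSurfaceChanged(width: Int, height: Int) {
        events += "changed:${width}x$height"
    }

    override fun onSurfaceDestroyed() {
        surface = null
        events += "destroyed"
    }

    // Draws only while a surface is attached; returns whether a frame was produced.
    fun renderFrame(): Boolean {
        val target = surface ?: return false
        events += "frame:${target.name}"
        return true
    }
}

// Adapter shaped like Android Auto's onSurfaceAvailable/onSurfaceDestroyed pair.
class CarSurfaceBridge(private val renderer: HeadlessRenderer) {
    fun onSurfaceAvailable(surface: FakeSurface, width: Int, height: Int) {
        renderer.onSurfaceCreated(surface)
        renderer.onSurfaceChanged(width, height)
    }

    fun onSurfaceDestroyed() = renderer.onSurfaceDestroyed()
}
```

Driving the bridge with a surface, one frame, and a teardown records created, changed, frame, destroyed in that order, and frames requested while no surface is attached are simply skipped, which is the behavior a View-less renderer needs when Android Auto takes the surface away.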

Performance

We expect this approach to be better, and, indeed, it is.

| Jank type | Min duration (ms) | Max duration (ms) | Mean duration (ms) | Occurrences |
|---|---|---|---|---|
| Buffer Stuffing | 10.71 | 27.43 | 18.40 | 76 |
| None | 11.22 | 18.98 | 14.82 | 63 |
| App Deadline Missed | 15.04 | 77.04 | 37.40 | 18 |
| App Deadline Missed, Buffer Stuffing | 17.41 | 39.63 | 29.30 | 7 |

Using our back-of-the-envelope “acceptable frames” calculation, we’re at around 84.8% acceptable frames across a 15-second window. We render 164 frames in that window, which is roughly 10.93 frames per second.

Presentation Renderer

The final method we tried is actually mentioned in the developer documentation, but I completely missed it. Only some time after writing my previous post about Compose without a View did I realize this method could be used here.

In summary, we:

- make a VirtualDisplay associated with the Surface given to us by Android Auto;
- wrap that VirtualDisplay in a Presentation:

  > A presentation is a special kind of dialog whose purpose is to present content on a secondary display. A Presentation is associated with the target Display at creation time and configures its context and resource configuration according to the display’s metrics.

- add our MapView to the Presentation;
- call presentation.show().
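The four steps above can be sketched in Kotlin roughly as follows. This is a minimal sketch under stated assumptions, not the code from the pull request mentioned below: it assumes the androidx.car.app library and MapLibre Native Android with the `org.maplibre.android` packages, and the class name `PresentationMapRenderer`, the display name, and the `styleUrl` parameter are hypothetical. It only runs on an Android device or the Android Auto desktop head unit, the app must hold the `androidx.car.app.ACCESS_SURFACE` permission, and the callback has to be registered with `AppManager.setSurfaceCallback`.

```kotlin
import android.app.Presentation
import android.content.Context
import android.hardware.display.DisplayManager
import android.hardware.display.VirtualDisplay
import androidx.car.app.SurfaceCallback
import androidx.car.app.SurfaceContainer
import org.maplibre.android.MapLibre
import org.maplibre.android.maps.MapView

// Hypothetical class; register it with
// carContext.getCarService(AppManager::class.java).setSurfaceCallback(renderer)
class PresentationMapRenderer(
    private val context: Context, // typically the CarContext
    private val styleUrl: String, // assumption: any MapLibre style URL
) : SurfaceCallback {
    private var virtualDisplay: VirtualDisplay? = null
    private var presentation: Presentation? = null
    private var mapView: MapView? = null

    override fun onSurfaceAvailable(surfaceContainer: SurfaceContainer) {
        val surface = surfaceContainer.surface ?: return
        MapLibre.getInstance(context)

        // 1. A VirtualDisplay that renders into the Surface Android Auto handed us.
        val displayManager = context.getSystemService(DisplayManager::class.java)
        val display = displayManager.createVirtualDisplay(
            "maplibre-android-auto", // hypothetical display name
            surfaceContainer.width,
            surfaceContainer.height,
            surfaceContainer.dpi,
            surface,
            DisplayManager.VIRTUAL_DISPLAY_FLAG_OWN_CONTENT_ONLY,
        ) ?: return
        virtualDisplay = display

        // 2. A Presentation (a Dialog) on that display; its context uses the
        //    virtual display's metrics.
        val dialog = Presentation(context, display.display)

        // 3. A plain MapView: default GLSurfaceView mode, no textureMode needed.
        val map = MapView(dialog.context).apply {
            onCreate(null)
            getMapAsync { maplibreMap -> maplibreMap.setStyle(styleUrl) }
        }
        dialog.setContentView(map)

        // 4. Show it; the map now draws directly into the car's Surface.
        dialog.show()
        map.onStart()
        map.onResume()

        presentation = dialog
        mapView = map
    }

    override fun onSurfaceDestroyed(surfaceContainer: SurfaceContainer) {
        mapView?.apply {
            onPause()
            onStop()
            onDestroy()
        }
        presentation?.dismiss()
        virtualDisplay?.release()
        mapView = null
        presentation = null
        virtualDisplay = null
    }
}
```

Because the MapView is an ordinary View inside a Dialog, it keeps its default GLSurfaceView mode, and overlays can be added by wrapping it in a ViewGroup before calling setContentView. VIRTUAL_DISPLAY_FLAG_OWN_CONTENT_ONLY is one reasonable choice of flag: it stops the virtual display from mirroring another display’s content.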

Performance

Unsurprisingly, these results were better than the first approach. What did surprise me is that they were also better than the “direct” approach discussed above:

| Jank type | Min duration (ms) | Max duration (ms) | Mean duration (ms) | Occurrences |
|---|---|---|---|---|
| None | 3.765 | 20.76 | 7.41 | 376 |
| Buffer Stuffing | 11.62 | 58.24 | 19.54 | 86 |
| App Deadline Missed | 6.176 | 80.53 | 27.73 | 51 |
| App Deadline Missed, Buffer Stuffing | 14.78 | 49.08 | 32.35 | 12 |

In this case, we are at around 88% acceptable frames, with a much higher frame count: 525 frames within the 15-second window, or about 35 frames per second.

Conclusion and Thoughts

Conceptually speaking, the “texture renderer” approach does double work: it draws once on the offscreen view, and then copies a bitmap to draw it again on the Android Auto surface. The “direct” and “presentation” renderers do only half of that work, since they draw directly to the Android Auto surface.

Therefore, the fact that the texture renderer is slow did not surprise me, nor did the fact that both the direct and presentation renderers were faster. What did surprise me is that the direct surface renderer was only slightly faster than the texture renderer. My guess is that this is due to a subtle bug, either in the patch itself or in our code that integrates it, or to imperfect test-device conditions. I would have expected the direct approach to be as performant as the presentation one, since it also draws only once.

If we assume that the direct surface and presentation approaches are similar in performance, there are several other reasons to prefer the presentation approach:

- The direct integration approach has a lot of code to maintain: Grab’s PR adds 2,238 lines of code.
- The direct integration approach is heavily tied to MapLibre’s internals. If a PR like this lives outside of MapLibre, it is likely to break whenever internal contracts change.
- The direct integration approach makes sharing code between an Android app and Android Auto harder. While most methods for manipulating the map live on MapLibreMap, some live on MapView directly. Any MapView methods the application calls would need to be either duplicated for Android Auto or routed through a wrapper that delegates to the correct underlying method.
- Drawing “on top of the map” needs OpenGL logic rather than vanilla Android UI logic.

Contrast that with the presentation approach:

- The presentation approach is much smaller: this pull request does it in ~71 lines of code.
- The presentation approach uses only the public API everyone already uses: MapView.
- The presentation approach requires no special wrappers, since it uses a MapView at the end of the day.
- Drawing “on top of the map” is just a matter of placing the MapView inside a ViewGroup with whatever else you need layered on top.

I hope this was useful to someone, and if you have additional learnings on this, please do reach out and share!

¹ Numbers are taken from running our app (not the sample app!) on a real Pixel 4a running Android 13 (yes, I realize this is a device from the 1960s, but that’s also what makes it a great test device!). Test data comes from route simulation over a 15-second window, with app and route restarts between tests.