Pitfalls when working with KMP Strings

Jan 19, 2025 · 5 minute read · code

kotlin · kmp

Alternative Title: “One cannot simply use string indices from Kotlin in Swift.”

One of the apps I am working on uses Kotlin Multiplatform for the business logic, with a dedicated SwiftUI implementation for iOS and a Compose Multiplatform implementation for the other platforms. I built a fairly complex search feature that outputs a list of search results, where each result includes the index ranges of the matched substrings to highlight in the text.

```kotlin
data class SearchResult(
    val text: String,
    val matches: List<IntRange>
)
```
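The post doesn't show how `matches` is computed. As a minimal, hypothetical sketch (the `search` function and its case-insensitive substring matching are assumptions, not the app's actual search logic), the ranges could come from a plain scan with `indexOf`. The resulting numbers are positions in a Kotlin `String`.

```kotlin
// Mirrors the SearchResult class above: matches holds inclusive index ranges into text.
data class SearchResult(
    val text: String,
    val matches: List<IntRange>
)

// Hypothetical search: finds every non-overlapping, case-insensitive occurrence of
// query in text and records it as an inclusive IntRange of Kotlin String indices.
fun search(text: String, query: String): SearchResult {
    val matches = mutableListOf<IntRange>()
    if (query.isNotEmpty()) {
        var from = 0
        while (true) {
            val start = text.indexOf(query, from, ignoreCase = true)
            if (start < 0) break
            // `until` excludes its end, so the range covers exactly query.length indices.
            matches.add(start until start + query.length)
            from = start + query.length
        }
    }
    return SearchResult(text, matches)
}
```

For example, `search("Salam, Kotlin Multiplatform", "kotlin").matches` is `[7..12]`.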

Using this in Compose is pretty straightforward:

```kotlin
@Composable
fun SearchResultView(result: SearchResult) {
    val annotatedString = AnnotatedString.Builder(result.text)
    result.matches.forEach {
        // +1 because addStyle's end is exclusive
        annotatedString.addStyle(SpanStyle(color = Color.Red), it.first, it.endInclusive + 1)
    }
    Text(annotatedString.toAnnotatedString(), fontSize = 36.sp, textAlign = TextAlign.Center)
}
```

We can write a simple preview for it:

```kotlin
@Preview
@Composable
private fun SearchResultViewPreview() {
    SearchResultView(
        SearchResult(
            text = "Salam, Kotlin Multiplatform",
            matches = listOf(7..13)
        )
    )
}
```

… and we’d get what we expect:

We can also use it in SwiftUI:

```swift
import SwiftUI
import SharedCode

struct SearchResultView: View {
    let text: String
    let ranges: [KotlinIntRange]

    var body: some View {
        Text(attributedString)
            .font(.system(size: 36))
    }

    var attributedString: AttributedString {
        var attributed = AttributedString(text)
        for range in ranges {
            // endInclusive is inclusive, so offset one past it to get an exclusive end
            // (the same +1 the Compose version applies).
            if let startIndex = text.index(text.startIndex, offsetBy: range.start.intValue, limitedBy: text.endIndex),
               let endIndex = text.index(text.startIndex, offsetBy: range.endInclusive.intValue + 1, limitedBy: text.endIndex) {
                if let attributedRange = Range(startIndex..<endIndex, in: attributed) {
                    attributed[attributedRange].foregroundColor = .red
                }
            }
        }
        return attributed
    }
}
```

We can have a simple preview for it:

```swift
#Preview {
    SearchResultView(
        text: "Salam, Kotlin Multiplatform",
        ranges: [KotlinIntRange(start: 7, endInclusive: 13)]
    )
}
```

and again, we’d get what we expect:

Challenges

So all good, right? Ship it? Not quite. Let’s try with something a bit more interesting. We’ll change our SearchResult to:

```kotlin
SearchResult(
    text = "يَبۡنَؤُمَّ",
    matches = listOf(2..5)
)
```

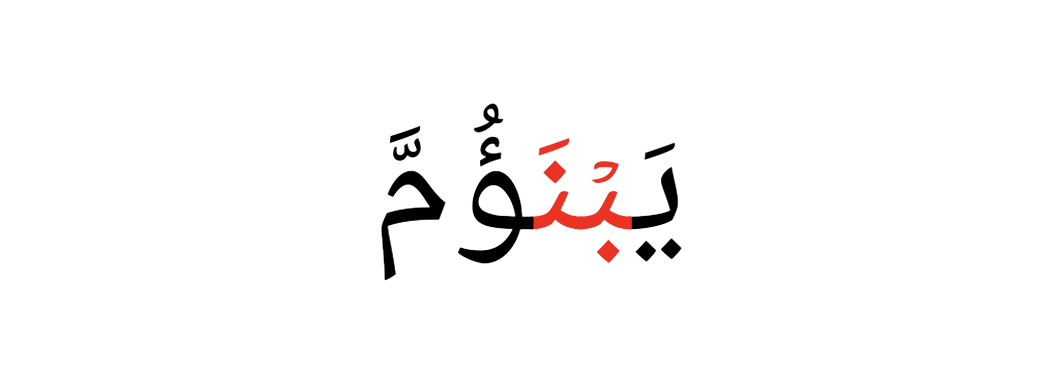

with this change, when we run on Android, we correctly see what we expect (I increased the font size a bit to make it clearer):

applying the same change to iOS, we get:

These are not the same! What’s going on here? Let’s try to figure this out by writing some code to show us the characters at every index:

```kotlin
text.forEachIndexed { index, char -> println("text[$index] = $char") }
```

running this on Android, we get:

```
text[0] = ي
text[1] = َ
text[2] = ب
text[3] = ۡ
text[4] = ن
text[5] = َ
text[6] = ؤ
text[7] = ُ
text[8] = م
text[9] = َ
text[10] = ّ
```

on iOS, we can write something similar:

```swift
for (index, character) in text.enumerated() {
    print("text[\(index)] = \(character)")
}
```

and we get something different:

```
text[0] = يَ
text[1] = بۡ
text[2] = نَ
text[3] = ؤُ
text[4] = مَّ
```

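We notice that, on Android, each letter has its own slot, and each diacritic on top of a letter also gets its own slot. That is because a Kotlin String is indexed by UTF-16 code units (each Char is one code unit), and every letter and mark in this text is a separate code point that fits in a single unit; this is how Kotlin strings behave on every platform, not just Android. On iOS, on the other hand, each slot holds a letter together with its diacritics, because iterating a Swift String yields Character values, which are extended grapheme clusters.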
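To see Swift's grouping from the Kotlin side, we can ask the JDK to split a string into user-perceived characters. This is a JVM-only sketch: `java.text.BreakIterator` is not available in common KMP code, the function name `graphemeClusters` is hypothetical, and BreakIterator's rules may not match Swift's extended grapheme clusters exactly for every input (emoji sequences on older JDKs, for example).

```kotlin
import java.text.BreakIterator

// JVM-only sketch: splits text into user-perceived characters using the JDK's
// character BreakIterator, roughly what Swift exposes as Character elements.
fun graphemeClusters(text: String): List<String> {
    val iterator = BreakIterator.getCharacterInstance()
    iterator.setText(text)
    val clusters = mutableListOf<String>()
    var start = iterator.first()
    var end = iterator.next()
    while (end != BreakIterator.DONE) {
        clusters.add(text.substring(start, end))
        start = end
        end = iterator.next()
    }
    return clusters
}
```

For "\u0628\u064E\u0646" (beh with a fatha, then noon), `length` is 3, but `graphemeClusters` returns two elements, the same split Swift's `enumerated()` would show.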

In Apple’s documentation for String, we see the following:

Many individual characters, such as “é”, “김”, and “🇮🇳”, can be made up of multiple Unicode scalar values. These scalar values are combined by Unicode’s boundary algorithms into extended grapheme clusters, represented by the Swift Character type. Each element of a string is represented by a Character instance.

So how do we work around this? Swift provides a few different “views” of a String: .utf8, .utf16, and .unicodeScalars. We are interested in .unicodeScalars:

```swift
for (index, scalar) in text.unicodeScalars.enumerated() {
    print("text[\(index)] = \(scalar)")
}
```

For this string, this view gives us values that match what we get with Kotlin:

```
text[0] = ي
text[1] = َ
text[2] = ب
text[3] = ۡ
text[4] = ن
text[5] = َ
text[6] = ؤ
text[7] = ُ
text[8] = م
text[9] = َ
text[10] = ّ
```
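The two listings agree because every letter and mark here fits in one UTF-16 code unit, which is what a Kotlin Char is. A character outside the Basic Multilingual Plane, such as most emoji, takes two Chars (a surrogate pair) in Kotlin but is a single element of .unicodeScalars. A minimal sketch in common Kotlin (the function name is hypothetical) walks a string by scalar and records the UTF-16 index where each one starts, which makes that difference visible:

```kotlin
// Walks text one Unicode scalar (code point) at a time and returns pairs of
// (UTF-16 index where the scalar starts, scalar value). Uses only common stdlib APIs.
fun scalarsWithUtf16Offsets(text: String): List<Pair<Int, Int>> {
    val result = mutableListOf<Pair<Int, Int>>()
    var i = 0
    while (i < text.length) {
        val high = text[i]
        if (high.isHighSurrogate() && i + 1 < text.length && text[i + 1].isLowSurrogate()) {
            // A surrogate pair encodes one scalar above U+FFFF in two Chars.
            val low = text[i + 1]
            val scalar = 0x10000 + ((high.code - 0xD800) shl 10) + (low.code - 0xDC00)
            result.add(i to scalar)
            i += 2
        } else {
            // Everything else (including an unpaired surrogate) is reported as one Char.
            result.add(i to high.code)
            i += 1
        }
    }
    return result
}
```

For "a\uD83D\uDE00b" (a, 😀, b), this yields `(0, 0x61)`, `(1, 0x1F600)`, `(3, 0x62)`: Kotlin puts "b" at index 3, while .unicodeScalars counts it as offset 2.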

What about .utf8? The UTF-8 view gives us the individual UTF-8 code units (bytes) that encode the string. For example, text[0] is the character ي, which is U+064A. In UTF-8, this is two bytes, 0xD9 (217) and 0x8A (138), which correspond to the first two entries we get if we print the .utf8 view of the string.

Aside: in Kotlin, we can get the UTF-8 byte representation, similar to the .utf8 view in Swift, by using .encodeToByteArray():

```kotlin
val bytes = text.encodeToByteArray()
bytes.forEachIndexed { index, byte ->
    println("text[$index] = ${byte.toUByte()}")
}
```

Note that Kotlin's Byte is signed, with values from -128 to 127, so we convert each one to an unsigned byte (UByte) to get the values between 0 and 255 that we'd expect, matching the UInt8 elements of Swift's .utf8 view.
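To double-check the two bytes quoted for ي (U+064A), the two-byte UTF-8 rule can be applied by hand: the first byte is 110 followed by the top five bits of the code point, and the second is 10 followed by the low six bits. A small sketch (the function names are hypothetical, and the encoder only handles code points from U+0080 to U+07FF) compares that with the standard library:

```kotlin
// Encodes a code point in the range U+0080..U+07FF as its two UTF-8 bytes,
// returned as unsigned values (0..255) for easy comparison with Swift's .utf8 view.
fun utf8TwoBytes(codePoint: Int): List<Int> {
    require(codePoint in 0x80..0x7FF) { "Only two-byte code points are supported" }
    val first = 0xC0 or (codePoint shr 6)     // 110xxxxx: top 5 bits
    val second = 0x80 or (codePoint and 0x3F) // 10xxxxxx: low 6 bits
    return listOf(first, second)
}

// The same bytes as produced by the standard library's UTF-8 encoder.
fun stdlibUtf8(text: String): List<Int> =
    text.encodeToByteArray().map { it.toUByte().toInt() }
```

For U+064A, 0x64A shr 6 is 0x19 and 0x64A and 0x3F is 0x0A, giving 0xD9 and 0x8A, the same two values `stdlibUtf8("\u064A")` returns.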

Solutions

With all this in mind, there are a few ways to get consistent highlighting between Swift and Kotlin. The first option is to use the .unicodeScalars view in Swift.

```swift
struct SearchResultView: View {
    let text: String
    let ranges: [KotlinIntRange]

    var body: some View {
        Text(attributedString)
            .font(.system(size: 64))
    }

    var attributedString: AttributedString {
        var attributed = AttributedString(text)
        // Count offsets in Unicode scalars rather than Characters.
        let scalars = text.unicodeScalars
        for range in ranges {
            // +1 turns the inclusive endInclusive into an exclusive end index.
            if let startIndex = scalars.index(scalars.startIndex, offsetBy: range.start.intValue, limitedBy: scalars.endIndex),
               let endIndex = scalars.index(scalars.startIndex, offsetBy: range.endInclusive.intValue + 1, limitedBy: scalars.endIndex) {
                if let attributedRange = Range(startIndex..<endIndex, in: attributed) {
                    attributed[attributedRange].foregroundColor = .red
                }
            }
        }
        return attributed
    }
}
```

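This version is identical to our first one, except that it computes the indices from text.unicodeScalars instead of text. Because all views of a Swift String share the same String.Index type, the resulting range does what we expect when we apply it to the AttributedString. One caveat: Kotlin indexes strings by UTF-16 code units, so scalar offsets only line up with Kotlin's indices when every scalar fits in a single code unit, as is the case for all of the Arabic text here. If the text can contain characters outside the Basic Multilingual Plane, such as most emoji, the .utf16 view matches Kotlin's indices exactly.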
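Another option, if Swift should keep using .unicodeScalars even for text that may contain surrogate pairs, is to translate each range on the Kotlin side before it crosses into Swift. This is a sketch under that assumption (both function names are hypothetical, not part of the app or any library); it counts how many scalars precede a given UTF-16 index.

```kotlin
// Number of Unicode scalars in text[0 until utf16Index], i.e. the offset that
// Swift's text.unicodeScalars would use for the same position.
fun scalarOffset(text: String, utf16Index: Int): Int {
    var scalars = 0
    var i = 0
    while (i < utf16Index) {
        val isPair = text[i].isHighSurrogate() &&
            i + 1 < text.length && text[i + 1].isLowSurrogate()
        i += if (isPair) 2 else 1
        scalars++
    }
    return scalars
}

// Converts an inclusive range of Kotlin (UTF-16) indices into an inclusive range
// of scalar offsets. Assumes the range does not split a surrogate pair.
fun toScalarRange(text: String, range: IntRange): IntRange {
    val start = scalarOffset(text, range.first)
    val endExclusive = scalarOffset(text, range.last + 1)
    return start until endExclusive
}
```

For text where every scalar is a single Char, like the Arabic example, the conversion leaves ranges unchanged; for "a😀b", the "b" at Kotlin range 3..3 becomes scalar range 2..2.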

Alternatively, instead of sending matches as a list of IntRanges, we can send back a list of the matched Strings and use something like:

```swift
let match = "بۡنَ"
if let range = text.range(of: match,
                          range: text.startIndex..<text.endIndex) {
    if let attributedRange = Range(range, in: attributed) {
        attributed[attributedRange].foregroundColor = .red
    }
}
```
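On the shared side, that alternative only needs the matched substrings instead of their positions. Assuming the SearchResult class from earlier, a minimal sketch (`matchedStrings` is a hypothetical name) can derive them with the standard `substring(IntRange)` overload. One trade-off to keep in mind: Swift's `range(of:)` returns only the first occurrence, so a match that appears several times in the text would need a loop on the Swift side.

```kotlin
data class SearchResult(
    val text: String,
    val matches: List<IntRange>
)

// Hypothetical helper: turns each inclusive index range into the substring it covers,
// so Swift can locate the text itself instead of interpreting Kotlin indices.
fun SearchResult.matchedStrings(): List<String> =
    matches.map { text.substring(it) }
```

For `SearchResult("Salam, Kotlin Multiplatform", listOf(7..12))`, this returns `["Kotlin"]`.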

Either of these solutions would have the same result, and we’d get the expected output on iOS: